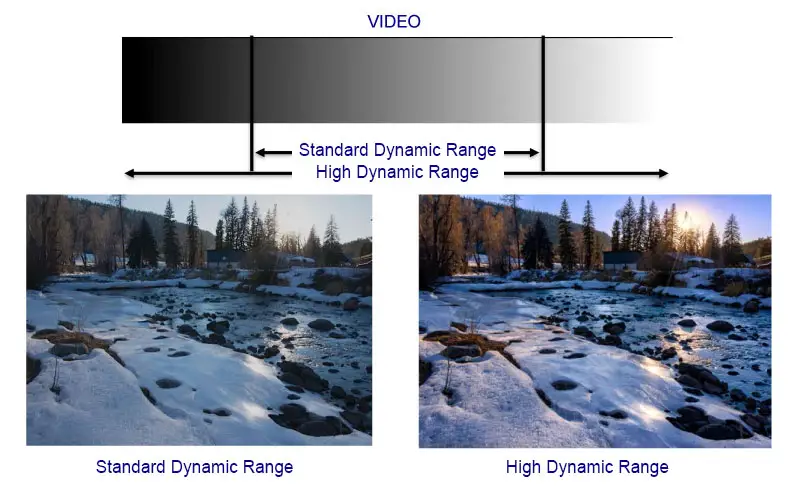

In 2016, the main novelty in TVs was support for HDR (High Dynamic Range): video with an extended range of brightness (tone) and color. HDR did not appear out of nowhere. Around 2010, display technology began to improve quickly, and it became clear that displays would soon reach a level where standard video could not deliver content of the quality they were capable of showing. The industry therefore worked out how to improve content quality by adding HDR data. By 2016, QLED and OLED TVs capable of displaying about a billion shades (10-bit color depth) had appeared, with HDR processing software built in.

What you need to know about HDR

Most HDR formats rely on metadata embedded in the video stream. This metadata is set when the video is shot, or generated when a game console creates a video stream. You might ask why metadata is needed at all: why not put all the necessary data straight into the encoded video? The answer is straightforward. HDR is designed for displays that support 10-bit color depth. An 8-bit display shows 256 gradations of brightness per color channel, while a 10-bit display shows 1,024, four times as many. A video made only for 10-bit displays would not display correctly on 8-bit ones. In addition, codecs compress video to reduce file size, so some color levels are stored for a group of pixels rather than for each pixel individually.

Lighting matters too: the quality of a recording depends heavily on how the scene is lit. Suppose you are filming a desk lamp shining on a table in a dark room. With a short exposure, the lamp looks fine, but every other object in the room disappears into darkness. Conversely, with a long exposure or high sensitivity, the previously invisible details become visible, but the lamp turns into a bright spot that outshines the objects around it.
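
The figures above (256 versus 1,024 gradations, "4 times more", and the "billion shades" from the introduction) are easy to check. The short sketch below assumes three color channels (R, G, B) with the same bit depth, and it uses plain bit truncation to show what happens when 10-bit values are squeezed into 8 bits. Real TVs and encoders use more careful conversions, such as rounding or dithering, so treat `to_8bit` as a hypothetical simplification.

```python
def levels(bits: int) -> int:
    """Number of brightness gradations a single color channel can represent."""
    return 2 ** bits


def total_colors(bits: int) -> int:
    """Distinct colors for three channels (R, G, B) at the given bit depth."""
    return levels(bits) ** 3


def to_8bit(code10: int) -> int:
    """Requantize a 10-bit code (0..1023) to 8 bits (0..255) by dropping the two lowest bits."""
    return code10 >> 2


print(levels(8), levels(10))        # gradations per channel
print(levels(10) // levels(8))      # how many times more gradations 10-bit has
print(total_colors(8))              # about 16.7 million colors
print(total_colors(10))             # about 1.07 billion colors
# Four neighboring 10-bit shades collapse into one 8-bit shade:
print([to_8bit(c) for c in range(512, 516)])
```

The last line shows why content graded for 10-bit panels loses subtle gradations on an 8-bit panel: codes 512 to 515 all map to the same 8-bit value, 128, which can show up as visible banding in smooth gradients.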

That is why video is delivered in formats that any TV can play: you can watch it on any TV, even one from before the HDR era. Modern TVs, however, support a wider range of color and contrast. They can show higher-quality video, and they use the metadata to improve the picture by adjusting brightness levels and making colors richer.
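
To illustrate how a TV might use metadata to adjust brightness levels, here is a minimal sketch. It assumes the TV reads only one value from the metadata, the content's peak brightness in nits, and compares it with its own panel's peak. The function name `tone_map`, the 0.75 knee, and the linear compression above the knee are hypothetical choices for illustration. They are not the algorithm of any manufacturer or HDR standard.

```python
def tone_map(nits: float, content_peak: float, display_peak: float, knee: float = 0.75) -> float:
    """Map a pixel's luminance (in nits) onto a display with a lower peak brightness.

    Hypothetical illustration: content_peak stands for the peak brightness a TV
    would read from HDR metadata; the linear knee curve is an assumption.
    """
    if content_peak <= display_peak:
        # The panel can show everything the content contains: no change needed.
        return min(nits, display_peak)
    knee_point = knee * display_peak
    if nits <= knee_point:
        # Shadows and midtones are reproduced unchanged.
        return nits
    # Highlights between knee_point and content_peak are squeezed linearly
    # into the remaining headroom between knee_point and display_peak.
    nits = min(nits, content_peak)
    ratio = (nits - knee_point) / (content_peak - knee_point)
    return knee_point + ratio * (display_peak - knee_point)


# Content mastered with a 1000-nit peak, shown on a 600-nit panel.
for value in (100, 450, 725, 1000):
    print(value, "->", tone_map(value, content_peak=1000, display_peak=600))
```

With these numbers, everything up to 450 nits passes through untouched, 725 nits lands at 525 nits, and the 1,000-nit peak maps exactly to the panel's 600 nits. Without the metadata, the TV would not know where the content's peak lies, so it could not choose the compression range.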

Overview of the features of different HDR formats

| HDR Format | Color Depth | Brightness (nits) | Metadata | Year Developed | Features |
|---|---|---|---|---|---|
| Dolby Vision | 12-bit | Up to 4000+ | Dynamic | 2014 | Supports 12-bit color depth, requires compatible hardware |
| HDR10 | 10-bit | Up to 1000 | Static | 2015 | Basic standard, one setting applied for the entire video |
| HLG (Hybrid Log-Gamma) | 10-bit | Depends on display | No metadata | 2016 | Designed for broadcasting, compatible with both SDR and HDR displays |
| Advanced HDR by Technicolor | 10-bit | Up to 1000 | Dynamic | 2016 | Offers flexibility in content production and broadcasting |
| HDR10+ | 10-bit | Up to 4000 | Dynamic | 2017 | Enhanced version of HDR10, adjusts brightness and contrast for each scene |
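
The "Metadata" column is the key practical difference between these formats. The sketch below compares how a TV might use static peak-brightness metadata, with one value for the whole video as in HDR10, against dynamic metadata, with one value per scene as in HDR10+ or Dolby Vision. The scene names, peak values, the 800-nit panel, and the single linear scaling factor are all hypothetical simplifications. Real formats carry richer metadata, and TVs apply tone curves rather than a single multiplier.

```python
from dataclasses import dataclass


@dataclass
class Scene:
    name: str
    peak_nits: float  # brightest pixel in the scene (hypothetical values)


def scale_factor(content_peak: float, display_peak: float) -> float:
    """Simple linear scaling: dim the picture only if the content exceeds the panel."""
    return min(1.0, display_peak / content_peak)


def static_factors(scenes: list[Scene], display_peak: float) -> dict[str, float]:
    """Static metadata: one peak value describes the whole video."""
    video_peak = max(s.peak_nits for s in scenes)
    factor = scale_factor(video_peak, display_peak)
    return {s.name: factor for s in scenes}


def dynamic_factors(scenes: list[Scene], display_peak: float) -> dict[str, float]:
    """Dynamic metadata: each scene carries its own peak value."""
    return {s.name: scale_factor(s.peak_nits, display_peak) for s in scenes}


scenes = [Scene("sunlit beach", 4000), Scene("candlelit room", 200)]
print("static: ", static_factors(scenes, display_peak=800))
print("dynamic:", dynamic_factors(scenes, display_peak=800))
```

With static metadata, the 4,000-nit beach scene forces the whole film to be dimmed to 20%, including the candlelit room, which the 800-nit panel could have shown as it is. With dynamic metadata, only the beach scene is scaled down and the dark scene keeps its full brightness.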

Dolby Vision: the most advanced format, designed with plenty of headroom for the future. It supports 12-bit color depth, although 12-bit displays do not exist yet, and its brightness levels can be set down to the individual frame.

HDR10: a simpler format in which the HDR metadata is set once, at the beginning of the video, and applies to the whole video.

HLG (Hybrid Log-Gamma): an HDR format without metadata. Luminance levels are recorded during shooting as gamma curves. On playback, the TV reproduces the portion of that range its display can show, depending on the display's quality, and ignores the luminance levels it cannot show. This makes HLG well suited to broadcasting.

Advanced HDR by Technicolor: Technicolor's own broadcast-oriented format, which is inferior to Dolby Vision and HDR10+.

HDR10+: a format similar to Dolby Vision (it also uses dynamic metadata), designed for displays with 10-bit color depth.
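
The "hybrid" in the HLG description refers to the shape of its curve. The sketch below implements the HLG OETF, the capture-side function that turns normalized scene light into a signal value, using the constants published in ITU-R BT.2100. The code is an illustration written for this article, not an excerpt from any broadcast toolchain, and the function name `hlg_oetf` is our own.

```python
import math

# Constants of the HLG OETF as published in ITU-R BT.2100.
A = 0.17883277
B = 1 - 4 * A                    # 0.28466892
C = 0.5 - A * math.log(4 * A)    # approx. 0.55991073


def hlg_oetf(e: float) -> float:
    """Convert normalized scene light E (0..1) into an HLG signal value E' (0..1)."""
    if not 0.0 <= e <= 1.0:
        raise ValueError("scene light must be normalized to the range 0..1")
    if e <= 1 / 12:
        # Lower part: a square-root (gamma-like) curve, similar to SDR video.
        return math.sqrt(3 * e)
    # Upper part: a logarithmic curve that compresses bright highlights.
    return A * math.log(12 * e - B) + C


for light in (0.0, 0.01, 1 / 12, 0.5, 1.0):
    print(f"{light:.3f} -> {hlg_oetf(light):.3f}")
```

The two halves meet at a signal value of 0.5, reached at 1/12 of the maximum scene light. Everything brighter goes through the logarithmic part. Because the lower part behaves like a conventional gamma curve, a display that ignores the highlight portion can still show a reasonable picture, which is what makes HLG attractive for broadcasting.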

The two most common HDR formats in video production are HDR10+ and Dolby Vision, and these two formats generally compete with each other. TVs, in turn, support multiple formats, and which ones depends on the manufacturer's preference: for example, Samsung TVs do not support Dolby Vision, while LG TVs do.